Proactive Hearing Assistants that Isolate Egocentric Conversations

¹Paul G. Allen School of Computer Science & Engineering, University of Washington

²Hearvana AI

*Equal contribution

EMNLP 2025 Main Conference

Abstract

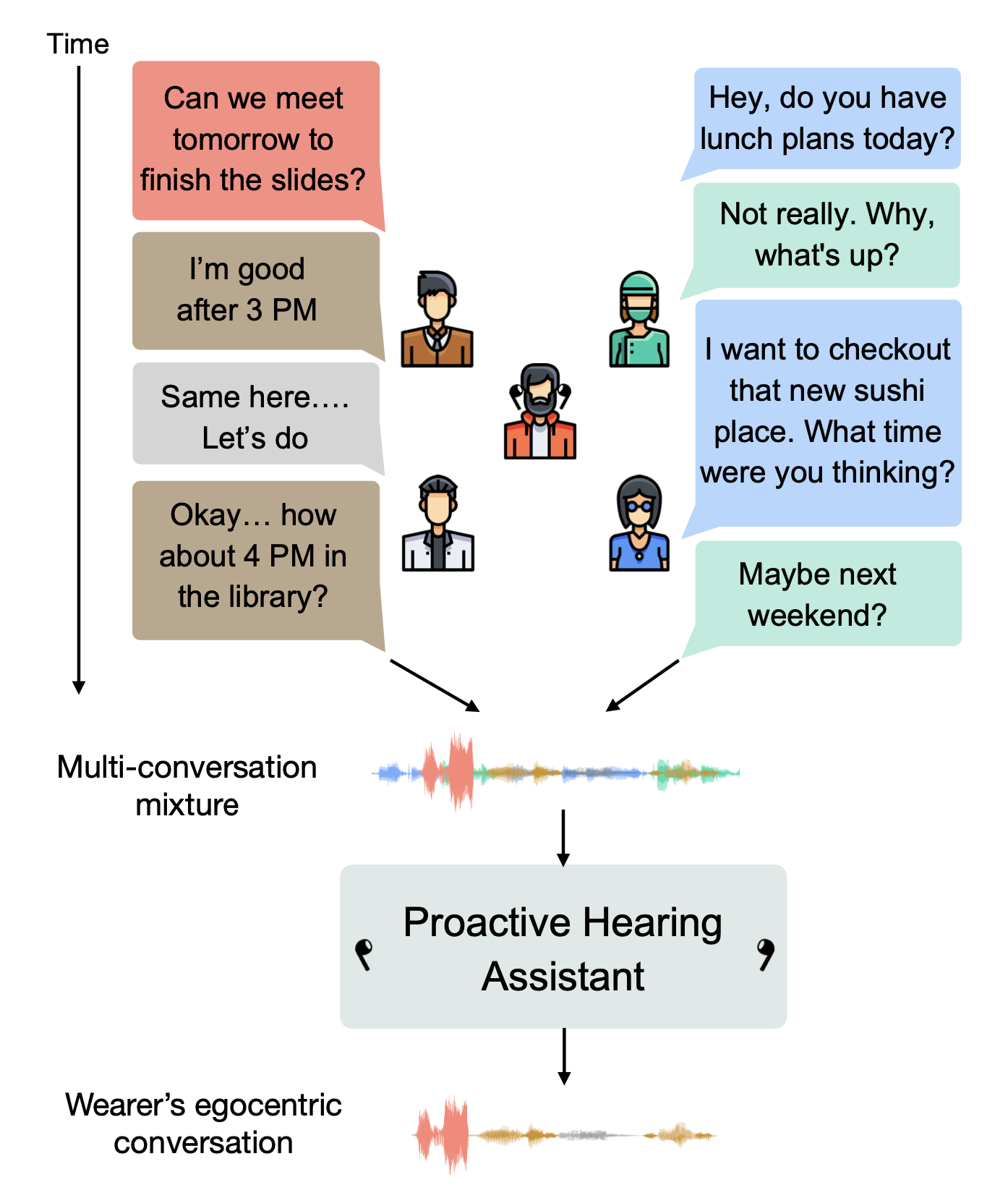

We introduce proactive hearing assistants that automatically identify and separate the wearer's conversation partners without requiring explicit prompts. Our system operates on egocentric binaural audio and uses the wearer's self-speech as an anchor. It leverages turn-taking behavior and dialogue dynamics to infer who the conversation partners are and to suppress other speakers. To enable real-time, on-device operation, we propose a dual-model architecture: a lightweight streaming model runs every 12.5 ms for low-latency extraction of the conversation partners, while a slower model runs less frequently to capture longer-range conversational dynamics. We evaluate on real-world 2- and 3-speaker conversation test sets, collected with binaural egocentric hardware from 11 participants and totaling 6.8 hours. The results show that the system generalizes to identifying and isolating conversation partners in multi-conversation settings. Our work marks a step toward hearing assistants that adapt proactively to conversational dynamics and engagement.
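The dual-rate design described above can be illustrated with a simple scheduler. This is a minimal, synchronous sketch under stated assumptions, not the authors' implementation. Only the 12.5 ms hop comes from the text. The 16 kHz sample rate, the `DualRateScheduler` class, the `slow_every` and `context_chunks` values, and the toy stand-in models are all hypothetical. The fast model processes every binaural chunk using the most recent conditioning from the slow model. The slow model refreshes that conditioning every `slow_every` chunks from a rolling window of past audio.

```python
from collections import deque
from typing import Callable, Deque, Optional

import numpy as np

HOP_MS = 12.5  # fast-model hop stated in the abstract


def samples_per_hop(sample_rate_hz: int, hop_ms: float = HOP_MS) -> int:
    """Number of samples per channel in one fast-model chunk."""
    samples = sample_rate_hz * hop_ms / 1000.0
    if not float(samples).is_integer():
        raise ValueError(f"{hop_ms} ms is not a whole number of samples at {sample_rate_hz} Hz")
    return int(samples)


class DualRateScheduler:
    """Hypothetical sketch: fast model every chunk, slow model every `slow_every` chunks."""

    def __init__(
        self,
        fast_model: Callable[[np.ndarray, np.ndarray], np.ndarray],
        slow_model: Callable[[np.ndarray], np.ndarray],
        chunk: int,
        slow_every: int,
        context_chunks: int,
    ) -> None:
        self.fast_model = fast_model
        self.slow_model = slow_model
        self.chunk = chunk
        self.slow_every = slow_every
        # Rolling window of recent binaural chunks fed to the slow model.
        self.history: Deque[np.ndarray] = deque(maxlen=context_chunks)
        self.cond: Optional[np.ndarray] = None  # latest slow-model output
        self.n_chunks = 0
        self.slow_calls = 0

    def process(self, binaural_chunk: np.ndarray) -> np.ndarray:
        """Consume one (2, chunk) binaural chunk and return the fast model's output."""
        if binaural_chunk.shape != (2, self.chunk):
            raise ValueError(f"expected shape (2, {self.chunk}), got {binaural_chunk.shape}")
        self.history.append(binaural_chunk)
        # Refresh the long-range conditioning every `slow_every` chunks
        # (run inline here; see the note below about running it asynchronously).
        if self.n_chunks % self.slow_every == 0:
            context = np.concatenate(list(self.history), axis=1)
            self.cond = self.slow_model(context)
            self.slow_calls += 1
        self.n_chunks += 1
        # The fast path runs on every chunk, reusing the most recent conditioning.
        return self.fast_model(binaural_chunk, self.cond)


# Usage with toy stand-ins (hypothetical): the "slow model" emits per-ear gains
# and the "fast model" applies them. Real models would be neural networks.
sample_rate = 16_000  # assumed sample rate
chunk = samples_per_hop(sample_rate)  # 200 samples at 16 kHz
sched = DualRateScheduler(
    fast_model=lambda x, gains: x * gains[:, None],
    slow_model=lambda ctx: np.full(2, 0.5),
    chunk=chunk,
    slow_every=16,  # hypothetical: slow model every 16 x 12.5 ms = 200 ms
    context_chunks=160,  # hypothetical: 2 s of history
)
audio = np.random.default_rng(0).standard_normal((2, sample_rate))  # 1 s of binaural noise
outputs = [sched.process(audio[:, i:i + chunk]) for i in range(0, audio.shape[1] - chunk + 1, chunk)]
```

One second of audio at 16 kHz yields 80 fast-model calls but only 5 slow-model calls with these settings. In a deployed system, the slow model would more plausibly run asynchronously, for example on a separate thread. The fast path would then never wait on it and would simply reuse the latest conditioning. The sketch calls the slow model inline only to keep the control flow easy to follow.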

Demo 1 with system OFF

Demo 1 with system ON

Demo 2 with system OFF

Demo 2 with system ON

Demo 3

Demo 4

Audio Samples

Compare the input mixtures, our system's outputs, and the ground-truth clean recordings.

BibTeX

@inproceedings{hu2025proactive,
  title={Proactive Hearing Assistants that Isolate Egocentric Conversations},
  author={Hu, Guilin and Itani, Malek and Chen, Tuochao and Gollakota, Shyamnath},
  booktitle={Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing},
  pages={25377--25394},
  year={2025}
}